One handy PowerShell script template to rule them all

- Eitan Blumin

- Jun 6, 2021


If you know me, you already know that I'm a huge fan of automation. So it's only natural that I would dabble in PowerShell at least once or twice (or a few dozen times) as a way to implement useful automations. After all, automation is pretty much the whole essence of PowerShell in the first place.

As I used PowerShell scripts more and more, I learned that there are a few things that are important to have whenever you use such scripts as part of automation (by "automation" I mean any sort of operation done "unattended", i.e., without direct human interaction in real time).

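Here are the main general points that I learned:

- Automated scripts must have historical logs
- Timestamps are important
- Historical logs need to be cleaned up
- Installing missing modules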

Or, if TL;DR and you want to skip to the juicy parts:

Automated scripts must have historical logs

If at any point an automated script fails for some reason, or does not behave as expected, it would be invaluable to have it produce and retain historical logs that could later be investigated for clues as to what it did, and where and why it failed.

PowerShell has a few useful cmdlets for this that can write output to various destinations, such as a log file. For example, Out-File.
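Here is a minimal sketch of the Out-File approach. The log file name is a hypothetical example. It is placed in the temp folder so that the sketch can run anywhere. Each call appends a single line to the log:

```powershell
# Hypothetical log file path, for illustration only
$logFile = Join-Path ([System.IO.Path]::GetTempPath()) "my_ps_script_example.log"

# Each call appends one line; without -Append, Out-File would overwrite the file
"Step 1 started" | Out-File -FilePath $logFile -Append
"Step 1 finished" | Out-File -FilePath $logFile -Append

# Read the log back to see what was written
Get-Content -Path $logFile
```

The catch is that only what you explicitly pipe into Out-File ends up in the file. Anything else your script or its cmdlets emit is lost.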

However, in my personal experience, nothing beats the level of verbosity offered by a special cmdlet called Start-Transcript.

This cmdlet receives a -Path parameter pointing to where you want to save your log (or "transcript") file. Once you run it, all output generated by your script is saved to that log file. This includes output from a system or third-party cmdlet you have no control over, even if you never used Out-File.

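It's important to note, though, that depending on your PowerShell version and host, the transcript may NOT capture output generated by the Write-Host cmdlet. Its sister cmdlets Write-Output, Write-Verbose, Write-Error, Write-Warning, etc. are all fair game and are captured by the transcript, as long as their output is actually displayed. Write-Verbose messages, for example, only appear when -Verbose is specified or $VerbosePreference is set to Continue.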

When using system or third-party cmdlets such as Invoke-SqlCmd, Remove-Item, or Copy-Item, remember to add the -Verbose switch so that those commands generate useful output.
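Here is a small sketch that shows the difference the -Verbose switch makes, using Copy-Item and Remove-Item on throwaway files (the file names are hypothetical). Without -Verbose, these cmdlets succeed silently. With it, each one writes a message describing what it did to the verbose stream, and that message is what you will later want to find in the transcript:

```powershell
# Hypothetical throwaway files in the temp folder
$tempDir = [System.IO.Path]::GetTempPath()
$source = Join-Path $tempDir "verbose_demo_source.txt"
$copy = Join-Path $tempDir "verbose_demo_copy.txt"

Set-Content -Path $source -Value "demo"

# Silent: produces no output at all when it succeeds
Copy-Item -Path $source -Destination $copy

# Verbose: describes each operation on the verbose stream
Copy-Item -Path $source -Destination $copy -Verbose
Remove-Item -Path $copy, $source -Verbose
```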

Having the output of those cmdlets will help you greatly while investigating historical transcript logs.

Once you're done saving output to your transcript file, you can run the Stop-Transcript cmdlet.
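An automated script can fail halfway through. One way to make sure Stop-Transcript always runs is to wrap the actual work in a try/catch/finally block. This is a minimal sketch, assuming a hypothetical transcript path in the temp folder:

```powershell
# Hypothetical transcript location, for illustration only
$transcriptPath = Join-Path ([System.IO.Path]::GetTempPath()) "transcript_demo.log"

Start-Transcript -Path $transcriptPath
try {
    Write-Output "Doing the actual work here..."
    Get-ChildItem -Path ([System.IO.Path]::GetTempPath()) | Select-Object -First 3
}
catch {
    # Log the failure while the transcript is still recording, then rethrow it
    Write-Warning "Script failed: $($_.Exception.Message)"
    throw
}
finally {
    # Runs whether or not the try block threw, so the transcript is always closed
    Stop-Transcript
}
```

The catch block writes the error before rethrowing it. Once the finally block has stopped the transcript, the error message that PowerShell displays afterwards would most likely no longer be recorded.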

Timestamps are important

Printing out messages is all nice and dandy, but sometimes it's also important to know when those messages were printed. There are all kinds of different reasons why it's important to have a timestamp next to your log messages. For example:

- To correlate the execution of specific steps or cmdlets with events and incidents elsewhere in your system, in order to understand their impact.
- To track the duration of specific operations, which could sometimes have critical implications. For example, when something that should be immediate suddenly takes several minutes to complete (or vice versa), it could mean that something went terribly wrong.
- To "performance tune" your script by detecting which cmdlets took the longest to execute, and possibly finding more efficient alternatives.

With that said, printing out the current timestamp is not always straightforward in PowerShell. So I wrote the following little function for myself, which can then be used anywhere within the script:

function Get-TimeStamp {

Param(

[switch]$NoWrap,

[switch]$Utc

)

$dt = Get-Date

if ($Utc -eq $true) {

$dt = $dt.ToUniversalTime()

}

$str = "{0:MM/dd/yy} {0:HH:mm:ss}" -f $dt

if ($NoWrap -ne $true) {

$str = "[$str]"

}

return $str

}

# Example usage:

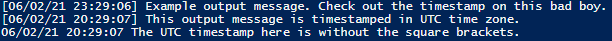

Write-Output "$(Get-TimeStamp) Example output message. Check out the timestamp on this bad boy."

Write-Output "$(Get-TimeStamp -Utc) This output message is timestamped in UTC time zone."

Write-Output "$(Get-TimeStamp -Utc -NoWrap) The UTC timestamp here is without the square brackets."The output of the above would look like this:
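The duration of an operation can matter as much as the moment it happened. This small sketch measures how long a step took using .NET's System.Diagnostics.Stopwatch class. Start-Sleep stands in for a real operation, and the 60-second threshold is an arbitrary example value:

```powershell
# Start-Sleep stands in for any real operation whose duration you care about
$stopwatch = [System.Diagnostics.Stopwatch]::StartNew()
Start-Sleep -Milliseconds 500
$stopwatch.Stop()

$elapsed = $stopwatch.Elapsed
Write-Output ("Step finished in {0:N3} seconds" -f $elapsed.TotalSeconds)

# A simple threshold check, e.g. to flag an unusually slow step in the log
if ($elapsed.TotalSeconds -gt 60) {
    Write-Warning "This step took longer than expected"
}
```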

Historical logs need to be cleaned up

What, you thought it was enough to just create historical logs and that's it? Those things take up space, buddy. Therefore, it's important to remember to delete those log files when they're too old.

Something like the following code block should do the trick nicely:

```powershell
# $logFileFolderPath is the folder where the transcript logs are saved
Get-ChildItem $logFileFolderPath |
    Where-Object { $_.Name -like "*.log" -and $_.LastWriteTime -lt $(Get-Date).AddDays(-30) } |
    Remove-Item
```

Installing missing modules

More often than not, you will be using some kind of special module (or several) as part of your PowerShell script. For example, dbatools, Az.Sql, or whatever else you crazy people use these days.

But could you be bothered to always remember to install those modules before running your automation scripts? If you forget, your scripts would fail. And what if they're already installed? Trying to install them again could cause an error too.

This trick below is based on something I learned from the source code of some of the DbaTools cmdlets. But I find it useful for many other things as well:

```powershell
# The modules this script depends on; edit this single line as needed
$modules = @("Az.Accounts", "Az.Compute", "Az.Sql", "dbatools")

foreach ($module in $modules) {
    if (Get-Module -ListAvailable -Name $module) {
        # Already installed: nothing to do
        Write-Verbose "$(Get-TimeStamp) $module already installed"
    }
    else {
        # Not installed yet: install it for the current user, then import it
        Write-Information "$(Get-TimeStamp) Installing $module"
        Install-Module $module -Force -SkipPublisherCheck -Scope CurrentUser -ErrorAction Stop | Out-Null
        Import-Module $module -Force -Scope Local | Out-Null
    }
}
```

The above is basically an "if-not-exists-then-install" check performed on an array of modules. The array can easily be changed in a single line of code.
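One thing to keep in mind with the snippet above is that $InformationPreference and $VerbosePreference both default to SilentlyContinue. As a result, the Write-Information and Write-Verbose messages are discarded and never reach the transcript. Here is a minimal sketch of turning them on at the top of a script:

```powershell
# Both preferences default to SilentlyContinue, which hides these messages entirely
$InformationPreference = "Continue"
$VerbosePreference = "Continue"

Write-Information "This informational message is now displayed (and transcribed)"
Write-Verbose "This verbose message is now displayed (and transcribed)"
```

Setting $VerbosePreference makes every cmdlet in the script verbose, not just your own messages. That can get noisy: Import-Module, for example, reports each command it imports. So you might prefer to enable only the information stream.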

And so, a script template was born

Taking everything I learned above, and more, I wrote myself a handy PowerShell script that I use as a "template" of sorts. Every time I need to start writing a new automation script, I take a copy of that template and add the relevant script body that I need.

I have a few such scripts that can be downloaded from the Madeira Toolbox GitHub repository:

Let's go over the main sections in these scripts and see what they do:

Params

The "Params" section declares the parameters for the script. These already include parameters that control things such as the path where the transcript logs should be saved, their retention depth in days, and so on. For example:

```powershell
Param
(
    [string]$logFileFolderPath = "C:\Madeira\log",
    [string]$logFilePrefix = "my_ps_script_",
    [string]$logFileDateFormat = "yyyyMMdd_HHmmss",
    [int]$logFileRetentionDays = 30
)
```

You can of course add more parameters for your own use here, as needed.
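The sketch below shows one way these parameters might be consumed at startup. It builds a timestamped transcript file name, starts the transcript, and then deletes logs older than the retention period. It is an assumed illustration, not the template's actual code. Plain variables stand in for the parameters, and the folder is a hypothetical temp location so that it can run anywhere:

```powershell
# Stand-ins for the template's parameters; the folder is a hypothetical temp location
$logFileFolderPath = Join-Path ([System.IO.Path]::GetTempPath()) "my_ps_script_logs"
$logFilePrefix = "my_ps_script_"
$logFileDateFormat = "yyyyMMdd_HHmmss"
$logFileRetentionDays = 30

# Make sure the log folder exists
if (-not (Test-Path -Path $logFileFolderPath)) {
    New-Item -Path $logFileFolderPath -ItemType Directory | Out-Null
}

# Build a file name such as my_ps_script_20210606_093000.log and start the transcript
$logFileName = $logFilePrefix + (Get-Date -Format $logFileDateFormat) + ".log"
$logFilePath = Join-Path $logFileFolderPath $logFileName
Start-Transcript -Path $logFilePath

# Delete this script's logs that are older than the retention period
Get-ChildItem -Path $logFileFolderPath -Filter "$logFilePrefix*.log" -File |
    Where-Object { $_.LastWriteTime -lt (Get-Date).AddDays(-$logFileRetentionDays) } |
    Remove-Item -Verbose
```

Starting the transcript before the cleanup means the deletions themselves (thanks to -Verbose) end up in the new log.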

Initialization

The "initialization" region encompasses everything you'd need to do before getting to the crux of your script, such as declaring the Get-TimeStamp function, deleting old transcript logs, and starting a new transcript file.

```powershell
#region initialization
```

Install Modules

The "install-modules" region would be responsible for making sure all the necessary modules are installed (as mentioned above).

```powershell
#region install-modules
```

Azure Logon

In the two template scripts involving Azure modules, I also included an "azure-logon" region responsible for connecting to your Azure account, and switching over to the correct subscription before you start doing anything else.
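Here is a minimal sketch of what such a logon step could look like, using Connect-AzAccount, Get-AzContext and Set-AzContext from the Az.Accounts module. The function name Connect-AzureSubscription is hypothetical, and so is the placeholder subscription ID:

```powershell
# Hypothetical helper; the Az.Accounts module must be installed when it is called
function Connect-AzureSubscription {
    param(
        [Parameter(Mandatory = $true)]
        [string]$SubscriptionId
    )

    # Reuse an existing Azure session if there is one, otherwise log in
    if (-not (Get-AzContext)) {
        Connect-AzAccount -ErrorAction Stop | Out-Null
    }

    # Switch to the requested subscription before doing anything else
    Set-AzContext -Subscription $SubscriptionId -ErrorAction Stop | Out-Null
}

# Example call (placeholder subscription ID):
# Connect-AzureSubscription -SubscriptionId "00000000-0000-0000-0000-000000000000"
```

For truly unattended runs, a plain Connect-AzAccount call prompts for an interactive login, which won't work. Connect-AzAccount also supports non-interactive options, such as -Identity for a managed identity or -ServicePrincipal.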

```powershell
#region azure-logon
```

Main

The "main" region is where you'd be putting the actual body of your script. Whatever it is you wanted to do, do it here.

```powershell
#region main
```

Finalization

The "finalization" region is at the very end of the script. All it does is stop the transcript, but you could also add something like cleanup code here, if needed.

```powershell
#region finalization
```

Conclusion

It's important to remember that these "template" scripts are nothing more than good "starting points".

As such, it's likely that you may have to add some of your own changes in order to make them fit whatever it is you intend to do with them.

Don't treat them like a strict form to fill out, but rather like a pliable piece of dough that you can shape into any form.

As always, your mileage may vary.

Happy automating!
